Story behind this project

Founded in 2006, Twitter has for years been a running commentary on what is going on in the world right now. I could not list every topic it hosts information on even if I wanted to; let us settle on almost everything a person can think of, and a lot more. Ever since machine learning became a coffee-table discussion for enthusiasts, one ML technique that another project contributor, Debanik Banerjee, and I could put to use was sentiment analysis of text. We did this with Python, a Twitter API client to fetch tweet texts, and a few open-source packages already created by developers (all of which are listed below for reference).

Project Details

You can find the code for this project on GitHub, where you can also fork or star it.

Packages used

All the packages used in this project are open source and available on PyPI.

- TextBlob - For sentiment analysis of the tweets.

- Folium - For creating a Leaflet map from location parameters.

- Tweepy - An open-source Python library for accessing the Twitter API and fetching tweets.

- Geopy - For geocoding the location name to latitude and longitude pairs.

- Tweet-Preprocessor - For cleaning the tweets.

Working of the script

The code opens with the import statements for all the packages used.

import folium

import tweepy

from textblob import TextBlob

import preprocessor as p

from geopy.geocoders import Nominatim

A Twitter developer account is needed to use Tweepy for fetching tweets. When you sign up for one, you are given four authentication values that the script uses to authenticate against the API. Store them in separate variables inside the Python script, like this.

consumer_key = "<Your Consumer Key Goes Here>"

consumer_secret = "<Your Consumer Secret Token Goes Here>"

access_token = "<Your Access Token Goes Here>"

access_secret = "<Your Access Secret Token Goes Here>"

Tweepy then uses these values to authenticate with Twitter and obtain permission to fetch live tweets, via these lines.

auth = tweepy.OAuthHandler(consumer_key, consumer_secret)

auth.set_access_token(access_token, access_secret)

api = tweepy.API(auth,wait_on_rate_limit=True)
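
Hard-coding the four values works for a quick run, but it makes them easy to commit by accident. Below is a minimal Python 3 sketch of one alternative, reading them from environment variables. The variable names (TWITTER_CONSUMER_KEY and so on) and the helper load_credentials are illustrative assumptions, not part of the original script.

```python
import os

# Hypothetical environment variable names; pick whatever suits your setup.
REQUIRED_VARS = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_SECRET",
)


def load_credentials(environ=os.environ):
    """Return the four credential values in order, or raise if any is missing."""
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return tuple(environ[name] for name in REQUIRED_VARS)
```

The returned tuple can be unpacked into consumer_key, consumer_secret, access_token and access_secret before the tweepy.OAuthHandler call.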

The user is asked for two inputs. One is the hashtag (a string) for which tweets are to be fetched; the other is a limiting value (an integer), because we don’t want the fetching process to go on forever. Note that raw_input exists only in Python 2; on Python 3 the equivalent function is input.

hashtag = raw_input("Input the hashtag for which tweets are to be extracted: ")

limiting_value=int(raw_input("Input the number of live tweets that are to be extracted: "))
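
The int(...) call above fails with a traceback on non-numeric input and happily accepts zero or a negative number. A small sketch of validating both inputs before any tweets are fetched; parse_inputs is a hypothetical helper, not something the original script defines.

```python
def parse_inputs(raw_hashtag, raw_limit):
    """Validate the two user inputs and return (hashtag, limit)."""
    hashtag = raw_hashtag.strip()
    if not hashtag:
        raise ValueError("hashtag must not be empty")
    limit = int(raw_limit)  # raises ValueError for non-numeric input
    if limit <= 0:
        raise ValueError("limit must be a positive integer")
    return hashtag, limit
```

It would be called with the two strings read from the prompts, for example parse_inputs("BJP", "1000").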

Two variables are declared. The mapvar variable holds an instance of the folium.Map class, created with a predefined location pair and a zoom_start value. The location given here is arbitrary and can be replaced with any valid lat-long pair: since zoom_start is 1, the map starts out zoomed far out anyway. The geolocator variable holds an instance of the Nominatim class from geopy.geocoders.

mapvar=folium.Map(location=[45.38,-121.67],zoom_start=1)

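geolocator = Nominatim(user_agent="twitter-sentiment-map")  # geopy 2.x requires an explicit user_agent; this name is arbitrary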

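We start fetching the tweets, only the English ones. Wrapping the cursor in enumerate gives us a counter i, starting at 1, which is used further down to stop the loop.

for i, tweet in enumerate(tweepy.Cursor(api.search,q=hashtag,lang="en").items(), 1):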

Unnecessary symbols and emojis are trimmed from both the tweet text and the tweet location by the clean function from the preprocessor package.

tweet_location=p.clean(tweet.user.location.encode('ascii','ignore'))

tweet_text=p.clean(tweet.text.encode('ascii','ignore'))[2:]
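
To give a feel for what this cleaning step does, here is a rough standard-library approximation in Python 3. It is only a sketch: rough_clean and its regular expressions are assumptions made for illustration, and the preprocessor package's actual rules may differ.

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_OR_TAG_RE = re.compile(r"[@#]\w+")


def rough_clean(text):
    """Strip URLs, @mentions, #hashtags and non-ASCII characters such as emojis."""
    text = URL_RE.sub("", text)
    text = MENTION_OR_TAG_RE.sub("", text)
    # Encoding to ASCII with errors ignored drops emojis and other non-ASCII symbols.
    text = text.encode("ascii", "ignore").decode("ascii")
    return " ".join(text.split())  # collapse leftover whitespace
```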

The location name is fed into the geolocator object, which returns a lat-long pair for valid locations (and None for names it cannot resolve). A feeling index is also obtained by feeding tweet_text into TextBlob: its polarity ranges from -1.0 to 1.0, positive for positive tweets and negative for negative ones.

location = geolocator.geocode(tweet_location)

feelings=TextBlob(tweet_text).sentiment.polarity
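
Many tweets come from users with the same profile location, and every geocode call is a network request. One possible optimisation, sketched here under that assumption, is to remember results per location name. make_cached_geocoder is a hypothetical helper that wraps any callable, such as geolocator.geocode.

```python
def make_cached_geocoder(geocode):
    """Wrap a geocode callable so each distinct location name is looked up once."""
    cache = {}

    def cached(name):
        key = name.strip().lower()
        if not key:
            return None  # empty profile location: nothing to look up
        if key not in cache:
            cache[key] = geocode(name)  # the result may be None for unknown places
        return cache[key]

    return cached
```

With this in place, the loop would call geocode = make_cached_geocoder(geolocator.geocode) once up front and then geocode(tweet_location) for each tweet.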

The value of feelings is then checked, and a corresponding positive (green) or negative (red) color is assigned to the marker. A polarity of exactly 0 counts as positive here.

if feelings>=0:

    icon=folium.Icon(color='green')

elif feelings<0:

    icon=folium.Icon(color='red')
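
The same rule can be written as a small pure function, which is easier to test and to extend. The sketch below keeps the behaviour above by default and optionally gives tweets with a polarity of exactly 0 their own color; marker_color and the neutral_color parameter are illustrative additions, not part of the original script.

```python
def marker_color(polarity, neutral_color=None):
    """Map a polarity in [-1.0, 1.0] to a marker color name."""
    if polarity == 0 and neutral_color is not None:
        return neutral_color  # optional third color for neutral tweets
    return "green" if polarity >= 0 else "red"
```

The result would be passed on as folium.Icon(color=marker_color(feelings)), or with neutral_color="gray" to tell neutral tweets apart from positive ones.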

Each marker is then added to the Leaflet map using folium.Marker, with the corresponding latitude and longitude as its location.

folium.Marker(location=[location.latitude,location.longitude],icon=icon).add_to(mapvar)
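
Since geocode returns None when it cannot resolve a location name, accessing location.latitude on such a result raises an AttributeError. A minimal sketch of a guard for that case; marker_position is a hypothetical helper name.

```python
def marker_position(location):
    """Return [latitude, longitude] for a geocoding result, or None to skip it."""
    if location is None:
        return None
    return [location.latitude, location.longitude]
```

Inside the loop, a tweet whose marker_position(location) is None would simply be skipped with continue instead of being added to the map.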

We then break out of the loop once limiting_value is reached.

if i==limiting_value:

    break
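
An alternative to counting and breaking by hand is to cap the stream itself. The sketch below does this for any iterable with itertools.islice; take is a hypothetical helper name. Tweepy's Cursor.items also accepts a limit argument, which would have a similar effect.

```python
from itertools import islice


def take(stream, limit):
    """Return an iterator over at most `limit` items from any iterable."""
    return islice(stream, limit)
```

The loop header would then read for tweet in take(cursor_items, limiting_value), where cursor_items stands for the Cursor(...).items() iterator, and the explicit break is no longer needed.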

Once the loop is complete, we save the folium map as an HTML file, which can be opened in any browser to display the map.

mapvar.save('twittermap.html')

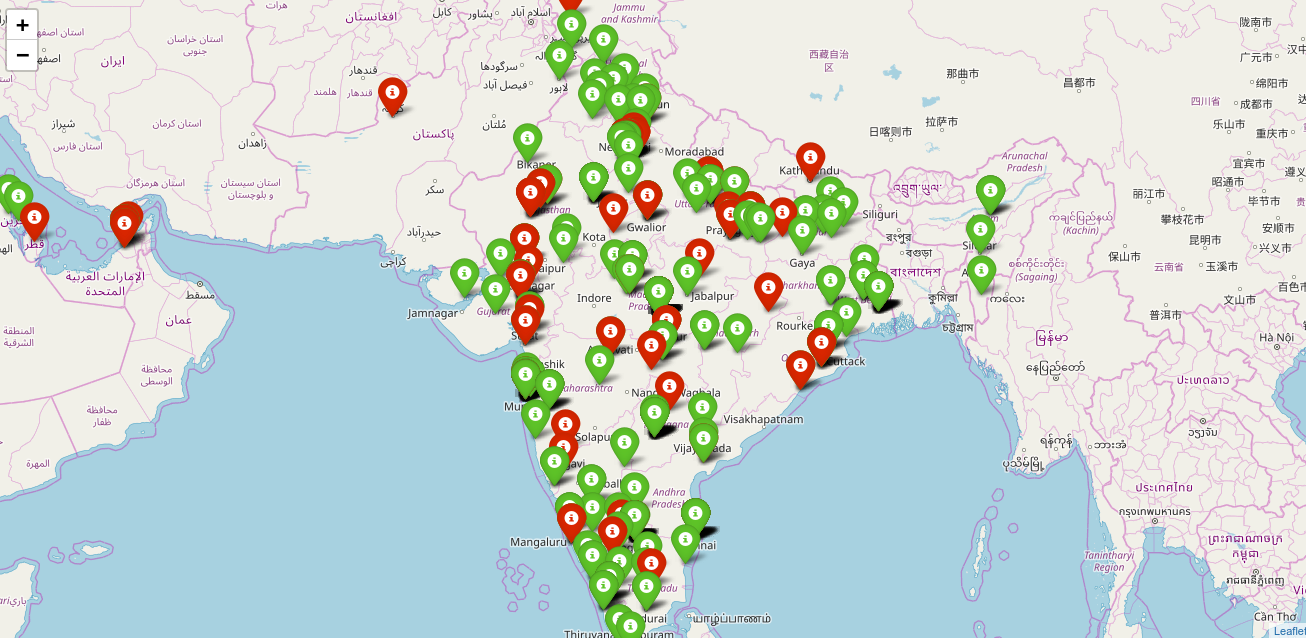

The map above is the output obtained from the inputs hashtag: BJP and limiting_value: 1000. For reference, BJP stands for the Bharatiya Janata Party, a prominent political party in India.

```